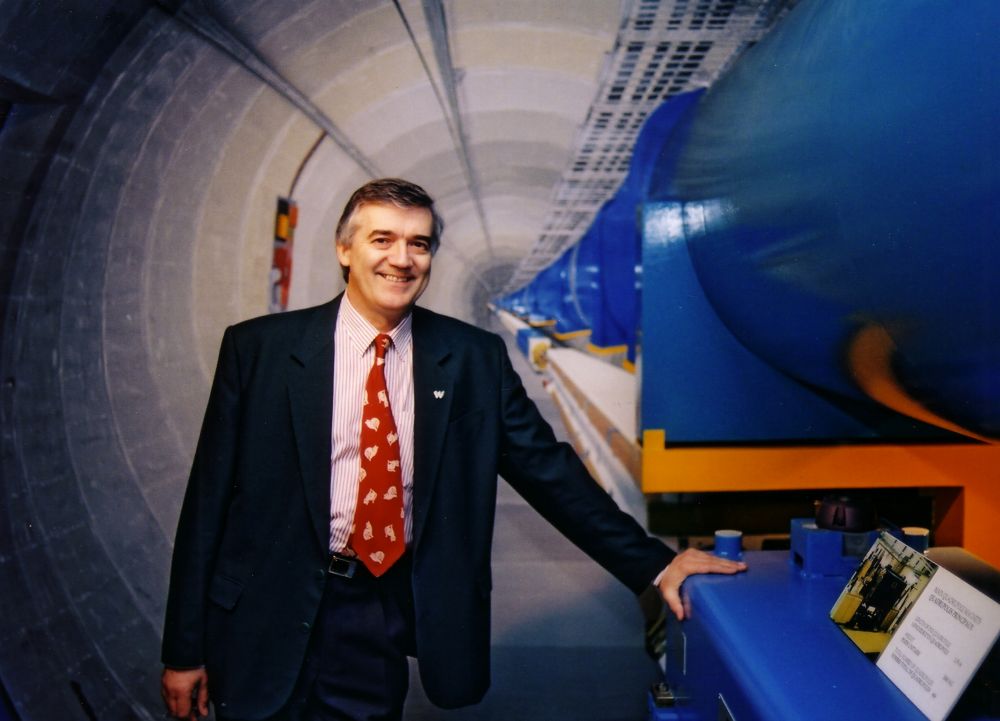

It is a great honor to be joined by Dr. Robert Cailliau, co-developer of the World Wide Web. The work he did jointly with Sir Tim Berners-Lee is of worldwide importance, and the story of their mass-appeal invention holds important lessons. Dr. Cailliau also has a personal fascination with stereoscopic 3D photography, and will be sharing samples from his library on MTBS in a later interview segment.

In part one of our exclusive interview, Dr. Cailliau shares some insights on his career, physics, the beginnings of the World Wide Web, and the significance of beer. As with all our previous interviews, Dr. Cailliau will make himself available to answer member questions.

Robert! Let’s set the record straight. Parlez-vous français?

Oui, bien sûr. But I was born in Tongeren, just above the horizontal line running through Belgium that was along the Northern end of the Roman Empire, so I am from the Germanic languages region not the Latin languages region. Hence I was brought up in Dutch.

"Flemish" is not a language, just like "Scottish", "Australian" and "Canadian" are slight varieties of English, "Flemish" is a slight variety of Dutch. There is a common Flanders-Netherlands commission that edits the dictionary and spelling that is used commonly in the Dutch speaking region that runs from this horizontal line that passes just under Brussels all the way up to the North of Holland.

But since I live in France, obviously I speak and write French. Quite well I should add.

What does it mean to be synaesthetic, and what does this have to do with the early logo for the WWW?

Synaesthesia is a condition whereby stimuli of one sense generate some trigger of another sense. You could call it "cross-talk" (for those who remember what that was in analog audio/video). So when I think of a symbol (a letter for example) then that triggers a colour. When I think of a "W" I see it in green. The early WWW logo therefore was a set of three overlapping W’s in green shades.

The form I have is very mild and the most common form. Some people "feel" shapes when they smell odours, others get tastes when they hear music and so on. About one person in ten to twenty thousand is a synaesthete. But as most have been put off in early childhood by the reactions of parents and friends, most are "in the closet" so to speak, and it’s only recently, mainly thanks to the web itself, that they have been able to understand their own condition and make contact with other synaesthetes. So I suspect that we will see a higher number turn up. Most synaesthetes are women, in a ratio of 12 to 1.

Let’s talk about your roots. It’s public record that you were born in Tongeren, Belgium and graduated from Ghent University in electrical and mechanical engineering. You later got your MSc from the University of Michigan in Computer, Information and Control Engineering. I understand you also have a military background! Can you share some of your experiences there?

Let’s set something straight here: in Anglo-Saxon countries an engineering degree is somewhat different from what it is in continental Europe. Here it is equivalent to an MSc already. I did most of my engineering MSc work on electrical stuff, but power transfer. Then I needed to use computers, but no such courses were offered in Ghent at the time (1970) and instead of going to, say, Darmstadt or Zurich, I went to Ann Arbor.

I don’t have a military background: military service was obligatory in Belgium for all able males. I did mine in the infirmary, as some sort of first-aid person. But since I was stationed in the Royal Military Academy, there was also a computing centre. They soon found out I would be much more useful helping with the "War Game" programming than with dispensing medicine to ill cadets and I was transferred to the School of War.

It was the era of the Cold War, remember. There were frequent exercises of the Allied armies in Western Germany. But that was very expensive, so they sent out only the officers and kept the soldiers and equipment in the barracks. We (two programmers in the School of War) maintained a large troop movement simulation program, some sort of SimCity type thing (but text data only) that told the officers the results of their commands. The communication was by telex and paper tape, from the computing centre to the fields in Germany and back. Great fun.

Let’s talk about CERN. What do they do? What makes this institution unique?

There is a very good talk available HERE.

In short, CERN started out in 1954 as a common infrastructure for particle physics research. It is impossible for individual countries, let alone individual universities, to construct the complex, large machines needed to obtain the very high energy densities at which the interesting interactions happen.

The machines are particle accelerators. They take charged particles (mainly protons) and push them to high speeds using electric fields, keeping them in a circular orbit by magnetic fields. Then we let them collide to see what happens at high energy densities. The most powerful accelerator is the Large Hadron Collider (LHC) that lies 100m under the ground at CERN, near Geneva. It is about 10km in diameter.

I have to go a little into some concepts:

Energy is something most are familiar with. It can exist in a potential form, such as the energy in a battery or in a large suspended weight or in water behind a dam. It is measured in Joule (J). It can also be seen to flow, as in a lit bulb. Then it is obviously measured in Joules that flow out per second, or J/s better known as a Watt (W). A Watt is one Joule per second.

Energy density is another matter. It is usually very small, i.e. there are very few Joules in a cubic meter of anything. That is, if you don’t count the conversion of mass into energy (the famous E=mc²). Of the useful energy stores that we commonly use, the most dense is in petrol: 42,000,000 J/liter and it is extremely cheap (not a joke!). By comparison, a rechargeable battery such as used in your iPod holds only 1,500,000 J/liter (i.e. if you made a battery that would occupy 1 liter of space, it would then weigh more than 4kg instead of the petrol’s 0.8kg, which is why electric cars are heavy and run down fast).

In the experiments we do at CERN, the energy of the particles in the LHC is about that of an entire intercity train running at full speed. A single bunch of particles (there are 2,800 in the beam) has about 124,000 J but it is concentrated in a space smaller than a cubic millimeter. That means just a single bunch holds about 400,000,000,000 J/liter!
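The arithmetic above can be checked with a short Python sketch. It uses only the figures quoted in the answer. The bunch volume of one cubic millimeter is an assumption, taken as an upper limit from "smaller than a cubic millimeter", so the resulting density is a lower bound; the quoted 400,000,000,000 J/liter corresponds to a volume of roughly a third of a cubic millimeter.

```python
# Figures quoted in the interview, in joules per liter.
PETROL_J_PER_LITER = 42_000_000
BATTERY_J_PER_LITER = 1_500_000

MM3_PER_LITER = 1_000_000  # 1 liter = 1,000,000 cubic millimeters


def energy_density_j_per_liter(energy_j: float, volume_mm3: float) -> float:
    """Return the energy density in J/liter for an energy held in a volume in mm^3."""
    return energy_j * MM3_PER_LITER / volume_mm3


BUNCH_ENERGY_J = 124_000   # energy of a single bunch, as quoted
BUNCHES_IN_BEAM = 2_800    # number of bunches in the beam, as quoted

# Assumption: the bunch fills a whole cubic millimeter, which gives a lower bound.
bunch_density_lower_bound = energy_density_j_per_liter(BUNCH_ENERGY_J, 1.0)

# How many times denser petrol is than the quoted rechargeable battery.
petrol_to_battery_ratio = PETROL_J_PER_LITER / BATTERY_J_PER_LITER

# Total energy carried by all bunches in the beam.
total_beam_energy_j = BUNCH_ENERGY_J * BUNCHES_IN_BEAM
```

Even with the generous one-cubic-millimeter volume, a single bunch comes out thousands of times denser in energy than petrol.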

When such a bunch collides with another one, you can imagine what happens: interactions at a violence that is close to what was going on at the Big Bang.

And that’s exactly what we’re looking for: how did particles behave at that time, a few microseconds after the Big Bang? Why do we do this? Because different phenomena begin to influence each other when they act at high enough energy densities.

Take electricity: you rub a glass bar with a catskin (poor cat) and the bar starts attracting small objects. That’s static electricity. Take a magnet and it will attract small objects made from iron: static magnetism. Now, put a compass next to a torchlight and switch the torchlight on. If it is powerful enough, the compass needle will get a kick. Moving electric particles generate a magnetic field. There is an influence from the electric stuff to the magnetic stuff (and vice-versa). But it does not happen if you wave the glass bar over the compass, because the energy density is not high enough to see the influence.

Once you have enough energy density you can come up with a description of both the electric and magnetic phenomena in one set of mathematical equations. For electromagnetism those are Maxwell’s famous equations that were first formulated in the 1860s and for which we still find new applications each day.

The equations "unified" electricity and magnetism.

When CERN did the Z/W boson experiments in 1983 we had enough energy density to show that electromagnetism and radioactivity are also two phenomena that can be unified into what is now called the electro-weak force.

Can we go on? There are two more forces that we know of: the strong force (that keeps the atomic nucleus together) and gravity.

We have a theory that allows us to describe even the strong force and the electroweak force into a single set of equations: the "standard model". However, the standard model has 18 numbers in it that we don’t know where they come from, and at least one "hole" in its table of particles.

We suspect that the "hole" is taken up by a particle that no-one has yet seen, the so-called Higgs boson. According to the theory, it must be a very heavy particle, and so requires a lot of energy density to create. That’s why we built the LHC.

Should we not detect the Higgs boson, then we have to demolish the standard model and find a different theory. If we do detect it, we hope that its behavior will explain the values of at least some of those 18 numbers.

The numbers, so we suspect, are a little like pi: first you observe that the ratio of the circumference of a circle and its diameter is always the same and about 3. You can obtain a better value of pi (3.14159...) by measuring the circumference of a large circle and dividing it by its diameter. The more accurately you measure, the better your value of pi will be. But when you study the circle and do enough geometry and math, you find that you don’t need to measure pi, you can calculate it from first principles.
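As an illustration of calculating pi rather than measuring it, here is a minimal Python sketch of one classical geometric route: Archimedes' method of inscribing polygons with more and more sides in a circle. The choice of this particular method and the function name `archimedes_pi` are assumptions made for the example; the answer above does not name a method.

```python
import math


def archimedes_pi(doublings: int) -> float:
    """Approximate pi from the perimeter of a regular polygon inscribed in a unit circle."""
    sides = 6          # start with a hexagon
    side_length = 1.0  # side of a hexagon inscribed in a circle of radius 1
    for _ in range(doublings):
        # Side length after doubling the number of sides, written in a form
        # that avoids subtracting two nearly equal numbers.
        side_length = side_length / math.sqrt(2.0 + math.sqrt(4.0 - side_length ** 2))
        sides *= 2
    # Perimeter divided by the diameter (2 for a unit circle).
    return sides * side_length / 2.0


approximation = archimedes_pi(10)  # polygon with 6 * 2**10 = 6144 sides
```

No circle is ever measured here: the value follows from geometry alone, and each doubling of the number of sides tightens the approximation.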

Similarly, we suspect that at least some of the 18 numbers should be calculated from other numbers and the Higgs boson properties should help.

That’s my understanding of the physics, but I’m not a physicist.

Wow! How did you fit in all this? Can you give us a brief history of your career at CERN?

I started in the accelerator divisions, doing control systems software for the smaller accelerator that is now used as a first stage for the LHC. That was in 1974. We had Norsk Data computers. Beautiful machines: they already had hardware separation of instructions and data. No way to write a virus and send it off as a piece of data! Also no way a program could mess up its own code and thereby make debugging extremely hard.

We used those machines to write the documentation of our highly specific accelerator control programs, and a friend and I developed a text processing system with interesting relative markup. Tim Berners-Lee used it to write up what he did when he was a contract programmer for a few months in the same project. We did not work close together at that time but I knew who he was.

Tim left CERN and I went to the computing division to become head of Office Computing Systems. My group was responsible for office software and for installing and maintaining a large number of office machines, at the time all Macs (1985-1988). In that capacity I once had dinner with Bill Gates in Zurich, as we wanted a university-type deal for Microsoft’s Excel and Word.

Then in 1989 there was a big restructuring and I wanted to return to more basic things, especially exploring hypertext systems. I left the computing division for a newly created Electronics and Computing for Physics division where I was not sure whether I wanted to work on programming techniques (object oriented programming systems were being introduced) or concentrate on hypertexts. Tim had returned to CERN from a spell in the UK, and his boss, a friend of mine, wanted me to look at his networked hypertext proposal. Tim was in the computing division, in a building more than a kilometer from where I was. I liked very much what he proposed, dropped my own proposal and joined him.

This can all be read in "How the Web was Born" by James Gillies. Click HERE to learn more.

What was your personal role in this collaboration?

From the start I did more on the management and public relations side. I’m eight years older than Tim, who himself was considered "old" by the young programmers. I had a family and I could not work through the night and through the weekends, programming in C. So when I came in on Monday I would usually be a couple of versions behind the others. Plus, I fundamentally hate C. It is one of the lousiest programming languages on the planet.

So I went on to get management support (remember that I had been a group leader for some time and knew my way in the hierarchy). I also wanted to get into the European Commission because it was obvious that CERN, a physics lab, could not afford to put much effort into an informatics project.

I did the first entirely WWW based project with the Commission and the Fraunhofer Gesellschaft in Darmstadt. Then I also forged links with INRIA, a true informatics lab, in the hope to find help there.

In 1993 I started organizing the first international WWW Conference (held in May 1994), and that brought in INRIA.

I worked for six months with the CERN Legal Service to make the document that put the web technology into the public domain on 30 April 1993 (now just 15 years ago!), which required convincing top management.

Then I also convinced the European Commission that the web was an instrument for schools and started the "Web for Schools" initiative that was very successful (though I had only time to follow it from the side). I was quite busy, I can tell you that.

Of that, I’m sure! Do you like beer? Tell us about the most important beer in your life.

I used to like beer, but don’t drink so much these days. There is a quote of mine, a reaction to a question from the floor at a plenary session of the Second International Conference, where a person asked why we had physical conferences on WWW when we should do it all through the internet and the web. I said: "People do want to meet in person, there is no such thing as a virtual beer."

I generally do not like the very light stuff that is popular in warm countries like the US and Australia. I prefer the heavy dark ones. "St Sixtus 13" used to be a favorite but I have not had it in a long time. These beers also require time and proper setting: a quiet long evening after dinner for example. Those seem to have gone too, evaporated into answering e-mail.

But there was another occasion where beer played a (marginal) role: in the early and hot spring of 1990, we were re-writing the proposal for management so that we would get some time to work on the web. We had no good project name and could not find one. Before going home in the evening we used to go to the CERN cafeteria for a light beer after the hot day.

We then discussed the missing name. On one of those evenings, after I had firmly rejected names of mythical characters such as "Zeus", "Pandora" and whatever, that were very popular as project names at the time, Tim proposed "World Wide Web". I liked it, except for the fact that the abbreviation was longer than the name and that "WWW" was unpronounceable in Latin languages. We agreed it would be a temporary name, to be used for the proposal only, until we found something better.

Was the web originally designed for a small group of physicists? Can you explain why your co-invention was so critical to the academic world? What problems were you trying to solve?

No, it was never explicitly intended for physicists. What I had observed was that physicists were sending files by floppy disk in internal mail envelopes and could not get at someone else’s files if the other person was not in the office. There was a need for a storage system that would allow people to find documents without the need of interacting with the author. An automatic, electronic, networked library.

Tim’s idea was somewhat different: he wanted to organize thoughts and grow collaborative documents. That could be useful for physicists, but I think that was only a justification, not a goal.

It was not really a case of trying to solve someone else’s problems but rather a case of trying to understand what was possible and how to do hypertexts over a network. Obviously, once we had it, the linking mechanism allowed academics to build a set of papers with references and that was what they wanted.

The web as we know it has grown beyond measure in both size and functionality. What was the core idea behind the original World Wide Web that Tim and yourself created? What is the significance of hypertext?

The functionalities we see today are no different from the early ones. There still is nothing to link documents other than the simple link we had and nothing to do other than filling in forms and getting a reaction from a database. The more "advanced" functions rely on programs that come with the page. They are done in Javascript, a language even worse than C.

Javascript fills the big hole that we left in the web: Tim was adamantly opposed to putting a programming language in. If you leave an obvious hole then it will very quickly be filled and it will be filled with something ugly and badly designed. Javascript was not even designed, it is an abomination.

The functionality of running a program on the server is very old. Even before the first server in the US was implemented (December 1991) there was a server at NIKHEF (Netherlands Institute for Nuclear (Kern) and High Energy Physics (Fysica)) that returned the square root of the number you gave it. It showed that one could do essentially anything on the server side.
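To make the idea of a square-root server concrete, here is a minimal sketch in modern Python using the standard library's `http.server`. It is not the NIKHEF implementation, whose details are not given here; the `/sqrt?n=...` URL shape, the function names and the port are all assumptions chosen for illustration.

```python
import math
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs, urlparse


def sqrt_reply(url: str) -> str:
    """Compute the reply text for a request URL such as '/sqrt?n=16' (hypothetical URL shape)."""
    query = parse_qs(urlparse(url).query)
    try:
        number = float(query["n"][0])
        if number < 0:
            raise ValueError("negative input")
    except (KeyError, ValueError):
        return "Please supply a non-negative number, e.g. /sqrt?n=16"
    return f"The square root of {number:g} is {math.sqrt(number):g}"


class SqrtHandler(BaseHTTPRequestHandler):
    """Answer every GET request with the computed text instead of a stored file."""

    def do_GET(self):
        body = sqrt_reply(self.path).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Run the server until interrupted; call serve() to try it locally."""
    HTTPServer(("localhost", port), SqrtHandler).serve_forever()
```

After calling `serve()`, opening `http://localhost:8000/sqrt?n=16` in a browser would show the computed answer. The page is produced by a program at request time, which is the point the answer makes.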

Hypertext has a lot of functionalities that the web does not have. I can’t go into this here, but the web is arguably the simplest and dumbest hypertext system in existence.

As to the core idea: that simply was to let any document on any server link to any other document on any other server. Nothing more than that. The system depends entirely on the specification of the URL (or URI, as you like).
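A small Python sketch can show how much the URL carries on its own. It uses the standard library's `urllib.parse`; the host names `server-a.example` and `server-b.example` and the document paths are made up for the example.

```python
from urllib.parse import urljoin, urlparse

# The document that contains the links (hypothetical address).
base = "http://server-a.example/physics/paper.html"

# Three links as an author might write them inside that document.
links = [
    "figures/plot.html",                            # relative to the current document
    "/index.html",                                  # relative to the current server
    "http://server-b.example/library/report.html",  # a document on another server
]

# Resolve each link against the address of the document it appears in.
resolved = [urljoin(base, link) for link in links]

# The host part of each resolved URL tells the browser which server to contact.
hosts = [urlparse(url).netloc for url in resolved]
```

The third link leaves `server-a.example` entirely, and nothing beyond the URL itself is needed to follow it.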

When you were developing this technology, how big was your team? Did you have an army of teachers and students working with you to make this invention possible? Did you have unlimited resources? (Pay attention to this answer, fellow S-3D Advocates!)

We had very limited resources and one of my worries was to get resources where I could. Tim was not very well supported in his division, I was better off, but in total we had one student each at any one time. That grew a little after 1993 and at the end of 1994 we had about four, i.e. the team was 6 people in total.

Inventions of this kind do not need vast armies, just a few bright kids and loads of support: the best network connections, machines, programming techniques, quiet offices and so on. There were of course a number of people working in other places too, after all there were 600 people who wanted to come to the first Conference in May 1994. But most of those did work on the periphery. The core development stayed at CERN until end 1994.

Questioning the usefulness of the World Wide Web today would be like setting aside the merits of sliced bread. How receptive were people to the idea in the early stages? Were your ideas welcomed and adopted with open arms or cold shoulders? How do you think your experiences relate to the S-3D industry?

In Belgium most bread is sold unsliced, but there is a slicing machine in the shop. Bread gets sliced just before you take it away, if you want it sliced that is.

In 1984 I read an article in a computer magazine describing the first Mac and how a mouse worked. It was quite impossible to imagine what a mouse could do from a description, you had to use it yourself to understand. It was much the same with the web. Unless you put people in front of a machine and let them click in web pages, they almost never understood how it worked, much less what its potential was.

The best illustration of this was my attempt at explaining it to the European Commission officer who was in charge of the grant for the Fraunhofer project. After about 10 minutes I gave up and suggested that we would set up a special meeting at which I would show what it could do. To the credit of the Commission, they agreed to hold a grant discussion meeting outside their offices, in the computing centre of the Free University of Brussels.

I purposely showed the then newly created "Dinosaur Exhibition" site of the Honolulu Community College in Hawaii (Kevin Hughes’ work). When the officer began to understand that each time I clicked the text and images actually travelled from Hawaii to the terminal, he took the mouse out of my hand and began to click around himself. But it was not always possible to give such a convincing demo because managers still were reluctant to touch computers and especially ones with a mouse.

At a network conference near Paris I sat down for lunch next to a person from the French Telecom and when I asked if he had an internet mailbox, he dryly answered that I should not assume that the Telecom would ever support the internet protocols.

We know what happened: the internet spread anyway, despite resistance from the telecoms.

In a similar vein, I have not experienced 3D movies or video games, and I think it’s no use talking about them until I have actually seen one. There is no number of words that can describe the real experience, and that is a real obstacle sometimes to spreading a technology.

Tell us about Gopher and Mosaic. What gave them temporary advantages against your work? Were you happy about the development of HTML? Why or why not?

Gopher was there a little before, and by coincidence the basic protocol looked much the same. But then that would not be too surprising: both systems deliver a page of information from a server to a client. Gopher was extremely easy to install and also very easy to populate with information. But its information was a tree, there were no links. Our pages contained addresses of more pages they were linked to. WWW required the insertion by hand of these links and so at least one bit of markup was required too.

The links made it much more useful to the reader: you could see from the context where a link was going to lead you to, and authors could use this to attract attention to pieces of information. Instead of browsing through a tree as in Gopher, in WWW you could follow a path laid out by the author.

The markup we used was inspired by a markup used at CERN for physics papers. There was an SGML guru at CERN, Anders Berglund, who understood that SGML was a good framework for making the different markups that physicists needed.

Let me take the opportunity to point out that HTML is not a subset of SGML, and that in fact you can’t write anything in SGML. SGML is the superstructure that allows one to define a certain markup. The "grammar" of a markup language is technically called a "document type definition" or DTD. You can have a DTD for letters, a different one for reports and so on.

HTML is a very bad name for a very lousy grammar. It should technically have been called "the web DTD". Calling it HTML implies that it is something like SGML but of course it is not. A good analogy is that HTML is to SGML like French is to Indo-European Languages.

SGML has since more or less gone away and has been superseded by XML. Again one cannot write a document in XML, but one can write a document in an XML compliant markup. XHTML is an XML compliant markup language.

Back to HTML: apart from the bad acronym, it is also a bad language in itself. It is not well-structured. For example, you can have headings of different importance (h1, h2, ... h6) but there is no concept of chapters, sections and so on that can be nested. It is a really flat set of directives and they are absolute to boot. I could go into many details, but it’s really not good. However, at the time it was a secondary worry. Unfortunately, once a large number of people have written servers full of HTML, it’s impossible to change. There are other examples of this disaster: USB is a bad design but we’re stuck with it. IEEE 1394 (FireWire) is much better.
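The point about flat headings can be illustrated with a short Python sketch. Because h1 to h6 only state a level, any software that wants chapters and sections has to reconstruct the nesting by itself. The function `nest_headings` and the sample outline are hypothetical, written only for this illustration.

```python
def nest_headings(headings):
    """Rebuild a nested outline from a flat list of (level, title) pairs."""
    root = {"title": None, "level": 0, "children": []}
    stack = [root]  # path from the root to the most recently added heading
    for level, title in headings:
        node = {"title": title, "level": level, "children": []}
        # Climb back up until the top of the stack is a shallower heading.
        while stack[-1]["level"] >= level:
            stack.pop()
        stack[-1]["children"].append(node)
        stack.append(node)
    return root["children"]


# A flat sequence of headings, as they would appear in an HTML page.
flat = [(1, "Introduction"), (2, "Background"), (2, "Method"), (1, "Results")]
outline = nest_headings(flat)
```

In a markup with real nested sections, this structure would be written down by the author instead of being guessed after the fact.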

Once there were a number of servers out there, people needed other browsers than the very fancy one that ran on the NeXT system. We had neither the manpower nor the knowledge to port the browser to the X system, but a number of X programmers did make "primitive" X browsers. I say "primitive" because those browsers did not support authoring, did not allow multiple windows and had no support for laying paths. They did attempt to introduce graphics, but usually bitmap graphics rather than vector graphics.

One of them came from NCSA, the National Center for Supercomputer Applications in Illinois. It was called X-Mosaic and was originally meant to do more integrated information access things. Its main characteristic was that it came as a single executable bundle. You could just download it and start browsing. Until then, the X browsers had all required some installation procedure with linking to local resources such as fonts. X-Mosaic spread like wildfire: it was indeed a virus, no installation required!

That happened in 1993. Soon after there were versions for MacOS and for Windows. NCSA had a team of 20 people on Mosaic. They got very sure of themselves and started inventing additions to HTML. In a sense they were right, but I wished that we could have had a better collaboration. Anyway, the world began to see the web through Mosaic. I think that collapse into Mosaic (later Netscape) set us back by about five years in the implementation of style sheets (we had them on the NeXT) and XML.

When I tell people the co-developer of the World Wide Web is making an appearance on MTBS, like clockwork the first response is "I thought Al Gore invented the web". Can you explain how this impression came to be, and PLEASE set the record straight?

My own understanding of the role of Al Gore is this:

1. From 1973 there was the internet, the infrastructure with NO content that carries the services that are mail, download, chat, and since 1990 the web.

2. During the 80’s, networks became important and there were several attempts at giving people access. The most successful of these was the Minitel in France.

3. In the US there was the NII, the National Information Infrastructure initiative in 1991. When we saw it we smiled and thought: “we have that...”, but the guy behind the NII was Al Gore. He did not know about the web then, because the first server in the US only got online in December 1991, but he had the idea right. So, a bit like we said we were actually DOING the NII, he must later have said the web was what he had had in mind with the NII.

You can find all that in Wikipedia and in the book “How the Web Was Born” by James Gillies and myself.

Stay tuned for part two of Dr. Robert Cailliau’s interview. Dr. Cailliau will share his thoughts on the stereoscopic 3D industry and its relationship to the web’s history. Is a stereoscopic 3D world wide web possible and what would it look like? What does the S-3D industry need to reach mass market success? Does our industry need standards, and who should set them?

Post your thoughts on this interview HERE. Dr. Cailliau will be answering our members’ questions and remarks in a special follow-up interview.
