Understanding software-side distortion correction

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Understanding software-side distortion correction

I initially asked about this in another post, but it appears to have been overlooked, so I was hoping some of you brainiacs could enlighten me a little.

If I'm not mistaken, the shader for distortion correction is applied at the very end of the pipeline, after the 2D scenes have already been rendered. If that's the case, does the shader just expand the image and interpolate adjacent pixels, or does it actually increase detail, with pixel/poly-correct detail (sorry, I don't really know the correct terminology)?

-

Owen

- Cross Eyed!

- Posts: 182

- Joined: Mon Aug 13, 2012 5:21 pm

Re: Understanding software-side distortion correction

Actually it shrinks the image slightly. It has the effect of pinching the corners inward while the center of the image is mostly unaffected.

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

The optics in the Rift compress the image towards the center, which seems to be what you're talking about, Owen. To account for this distortion by the time the image reaches the viewer's eyes, the software stretches the image to counteract it.

I'm asking this because Palmer states that the pixel density is higher looking through the center of the optics than at the edges. If the shader used to correct the distortion only interpolates adjacent pixel data, then the level of detail available in the higher-ppi area (of the optics, not the LCD, obviously) is not being used in the most effective way. If I'm not mistaken, you would actually need to account for distortion when you're rendering the scene, and not do it as a post-processing effect.

-

druidsbane

- Binocular Vision CONFIRMED!

- Posts: 237

- Joined: Thu Jun 07, 2012 8:40 am

- Location: New York

- Contact:

Re: Understanding software-side distortion correction

MSat wrote: If I'm not mistaken, you would actually need to account for distortion when you're rendering the scene, and not do it as a post-processing effect.

I think it can be a post-processing effect, and my guess (at least what I'll try) is that you want to start with a higher-resolution surface and then post-process it down to the lower resolution of the Rift, or possibly the MSAA thingy takes care of that detail to make sure we have more pixels in the center. If you distort only the original image at the resolution of the Rift, you'll gain no extra detail, because you didn't stretch more pixels and distort them outward so that they are sharper when the image gets redistorted down.

Ibex 3D VR Desktop for the Oculus Rift: http://hwahba.com/ibex - https://bitbucket.org/druidsbane/ibex

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

druidsbane wrote: MSat wrote: If I'm not mistaken, you would actually need to account for distortion when you're rendering the scene, and not do it as a post-processing effect.

I think it can be a post-processing effect, and my guess (at least what I'll try) is that you want to start with a higher-resolution surface and then post-process it down to the lower resolution of the Rift, or possibly the MSAA thingy takes care of that detail to make sure we have more pixels in the center. If you distort only the original image at the resolution of the Rift, you'll gain no extra detail, because you didn't stretch more pixels and distort them outward so that they are sharper when the image gets redistorted down.

OK, I can see how applying a post-processing effect to a scene rendered above the native display resolution would work. It sounds like the simplest method, but perhaps not the most efficient?

- cybereality

- 3D Angel Eyes (Moderator)

- Posts: 11407

- Joined: Sat Apr 12, 2008 8:18 pm

Re: Understanding software-side distortion correction

What I am doing with my driver is taking a normal full-resolution render (i.e. 720p) and warping it inward a little in a final pixel shader. The center has almost no warping, and the warping increases as you get closer to the sides. The corners do not have real data; they are just stretched versions of the pixels on the edge. I think Dycus tried this out with the Rift, and he said it looked almost perfect. This was based on an image that John Carmack posted, so it should be similar to what people have seen in the live demos. I have not tried it myself with the Rift, but hopefully it will look good enough.
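A rough per-pixel sketch of this kind of final-pass inward warp, written in Python rather than as a pixel shader. Everything specific here is an assumption, not taken from cybereality's driver: a single radial coefficient `k`, coordinates normalized so the nearest image edge sits at radius 1, and nearest-neighbour sampling. Output pixels whose sample position falls outside the source are clamped to the edge, which reproduces the stretched edge pixels described for the corners.

```python
def warp_inward(src, k):
    """Radially warp a 2D image (list of rows) toward its center.

    For each output pixel at normalized radius r, sample the source at
    radius r * (1 + k * r^2). With k > 0 the sample point moves outward,
    so image content is pulled toward the center. Samples that land
    outside the source are clamped to the nearest edge pixel.
    """
    h, w = len(src), len(src[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    half = min(cx, cy) or 1.0  # normalize so the nearest edge is at r = 1
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            nx, ny = (x - cx) / half, (y - cy) / half
            scale = 1.0 + k * (nx * nx + ny * ny)  # uses r^2 only, no sqrt
            sx = cx + nx * scale * half
            sy = cy + ny * scale * half
            # Clamp to the edge, then take the nearest source pixel.
            ix = min(max(int(round(sx)), 0), w - 1)
            iy = min(max(int(round(sy)), 0), h - 1)
            row.append(src[iy][ix])
        out.append(row)
    return out
```

With `k = 0` the image comes back unchanged; increasing `k` pulls more of the periphery inward while the center stays nearly untouched.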

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

cybereality wrote: What I am doing with my driver is taking a normal full-resolution render (i.e. 720p) and warping it inward a little in a final pixel shader. The center has almost no warping, and the warping increases as you get closer to the sides. The corners do not have real data; they are just stretched versions of the pixels on the edge. I think Dycus tried this out with the Rift, and he said it looked almost perfect. This was based on an image that John Carmack posted, so it should be similar to what people have seen in the live demos. I have not tried it myself with the Rift, but hopefully it will look good enough.

Ah, ok - this makes sense and sounds good too. I had been thinking the center needed to be stretched out, instead of warping the periphery inwards. Thanks for the examples, guys!

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

Another question (I suppose no one but the insiders might know this) - Are more pixels squeezed towards the middle of the optics, or is it just that the central pixels are roughly kept in place, and the periphery is stretched out?

- Dycus

- Binocular Vision CONFIRMED!

- Posts: 322

- Joined: Wed Aug 15, 2012 1:38 pm

- Contact:

Re: Understanding software-side distortion correction

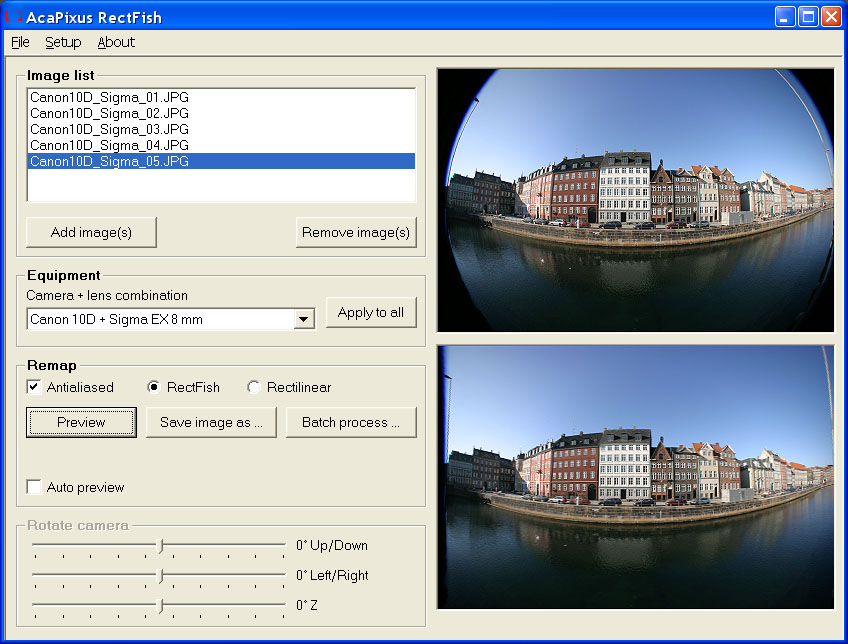

This is a quick photoshop approximation of what the optics do:

Both, really. The center is made more dense and the outer pixels are stretched a bit.

Both, really. The center is made more dense and the outer pixels are stretched a bit.

- mahler

- Sharp Eyed Eagle!

- Posts: 401

- Joined: Tue Aug 21, 2012 6:51 am

Re: Understanding software-side distortion correction

Dycus wrote: This is a quick Photoshop approximation of what the optics do:

[image]

Both, really. The center is made more dense and the outer pixels are stretched a bit.

This is mesmerizing.

- brantlew

- Petrif-Eyed

- Posts: 2221

- Joined: Sat Sep 17, 2011 9:23 pm

- Location: Menlo Park, CA

Re: Understanding software-side distortion correction

Thanks Dycus. Have you viewed this image through a Rift or is this just a guess?

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

Thanks for the response, Dycus

This leaves me wondering what the implications are for rendering the parts of the scene that will land in the compressed-pixel region, in order to maximize detail and sharpness. Very interesting!

BTW, will the Rift have an RF-modulated input for NES support?

- Dycus

- Binocular Vision CONFIRMED!

- Posts: 322

- Joined: Wed Aug 15, 2012 1:38 pm

- Contact:

Re: Understanding software-side distortion correction

Brant - Naw, I just whipped it up with no regard for accuracy. It's just an illustration, sorry!

MSat - Oh yeah, we're totally gonna have NES support. In fact, I'm working on a custom video chip for it right now so it splits the image for the Rift.

-

EdZ

- Sharp Eyed Eagle!

- Posts: 425

- Joined: Sat Dec 22, 2007 3:38 am

Re: Understanding software-side distortion correction

Dycus wrote: This is a quick Photoshop approximation of what the optics do: [image] Both, really. The center is made more dense and the outer pixels are stretched a bit.

Wait, is that what happens to a linear grid viewed through the Rift's optics, or what would need to be displayed in order to see a linear grid through the optics?

-

Endothermic

- Binocular Vision CONFIRMED!

- Posts: 284

- Joined: Tue Jun 26, 2012 2:50 am

Re: Understanding software-side distortion correction

EdZ wrote: Wait, is that what happens to a linear grid viewed through the Rift's optics, or what would need to be displayed in order to see a linear grid through the optics?

That is what the Rift lenses do to the image, so if you viewed a perfectly square grid through the Rift, it would look like that.

This is what needs to be displayed in the Rift so that it looks normal after the lenses do that warping. Of course, it may need to be warped inward more strongly depending on the lenses they finally end up using.

-

TheLookingGlass

- Two Eyed Hopeful

- Posts: 73

- Joined: Fri Aug 31, 2012 6:22 am

Re: Understanding software-side distortion correction

Dycus wrote: Brant - Naw, I just whipped it up with no regard for accuracy. It's just an illustration, sorry!

MSat - Oh yeah, we're totally gonna have NES support. In fact, I'm working on a custom video chip for it right now so it splits the image for the Rift.

Sadly, I spent way too many minutes trying to figure out if you were serious or not. Minutes are precious.

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

Any chance Oculus may grace us with some more technical details (such as an accurate distortion map) prior to the release of the SDK? PRETTTY PLEEEEAASEEE!

- Dycus

- Binocular Vision CONFIRMED!

- Posts: 322

- Joined: Wed Aug 15, 2012 1:38 pm

- Contact:

Re: Understanding software-side distortion correction

If we could possibly get one, maybe. I'd have to ask Palmer, but maybe. I'm not sure how we'd make one (I could probably rig something up with lasers), but I'm not sure if we want the public to have it yet. I'll ask him for you.

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

I know there was some mention of custom optics and whatnot, so I wouldn't be surprised if such a map doesn't even exist yet. There's no rush. I'm just hoping we can get a little info when it's available, assuming such parameters will need to be known in order for you guys to develop the SDK anyway.

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

What about taking a photo of a grid through the optics of one eye with a 90° or 110° FOV camera? Using this, it should be quite straightforward to write an algorithm that does the inverse operation.
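One way to turn such a grid photo into a correction, sketched under assumptions that are not in the thread: the distortion is purely radial and follows r_d = r (1 + k1 r² + k2 r⁴) (the radial terms of the Brown model that comes up later), and you have already measured pairs of radii (r, r_d) from the grid intersections, where r is the position on the display and r_d the apparent position in the photo, both in the same normalized units. Because the model is linear in k1 and k2, a plain least-squares fit recovers them.

```python
def fit_radial(pairs):
    """Least-squares fit of k1, k2 in r_d = r * (1 + k1*r^2 + k2*r^4).

    pairs: iterable of (r, r_d) measured from grid intersections.
    Rearranged, r_d - r = k1*r^3 + k2*r^5, which is linear in k1 and k2,
    so the 2x2 normal equations are solved directly (Cramer's rule).
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for r, rd in pairs:
        u, v, y = r ** 3, r ** 5, rd - r
        a11 += u * u
        a12 += u * v
        a22 += v * v
        b1 += u * y
        b2 += v * y
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("need at least two distinct nonzero radii")
    k1 = (b1 * a22 - a12 * b2) / det
    k2 = (a11 * b2 - a12 * b1) / det
    return k1, k2
```

If the measurement really maps display radius to apparent radius, a correction pass can sample the rendered image at r_d for each display pixel at r, so the fitted polynomial can be used directly without inverting it. Whether that holds depends on how the photo is set up.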

-

Owen

- Cross Eyed!

- Posts: 182

- Joined: Mon Aug 13, 2012 5:21 pm

Re: Understanding software-side distortion correction

Given the known (to them) geometry of the lenses, an exact analytic formula for the distortion should be straightforward to calculate.

A numeric solution using real measurements would be more expensive to evaluate and probably not even as accurate, since it would inherently be an approximation subject to sampling error. It would also be harder to make it parametric with respect to pupillary distance, which it may have to be unless the eyepieces are adjustable.

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

I would highly encourage everyone to read the Wikipedia article on lens distortion. It has everything you need to write a configurable, general-purpose distortion correction shader. First-order barrel distortion will most likely be enough, second-order distortion might be of some use, and higher orders are most likely irrelevant. Many programs used for 3D animation and video post-production work with first-order distortion only, and that is almost always good enough (usually with less dramatic distortion, though).

If highest speed is your goal (I bet it is), the equation can be simplified significantly by leaving out the tangential distortion part. For the best possible image quality, you may want to introduce a zoom factor into your algorithm to counteract the inherent minification. This way you won't have to scale up the output image.

The Wikipedia article on chromatic aberration is quite interesting too; it makes pretty clear why that is a much harder issue to solve.

Edit: edited for clarity, some additions
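A minimal sketch of the radial-only Brown model with a zoom factor, as a per-pixel Python function rather than shader code. The coefficients `k1`, `k2` and the `zoom` value are placeholders, not measured Rift values, and coordinates are assumed to be normalized with the lens center at (0, 0). Only r² = x² + y² is needed for the k1 r² and k2 r⁴ terms, so this form needs no square root.

```python
def brown_radial(x, y, k1, k2=0.0, zoom=1.0):
    """Map an output coordinate (x, y) to the source coordinate to sample.

    Radial-only Brown model: scale = 1 + k1*r^2 + k2*r^4, with the
    tangential terms omitted. Dividing by `zoom` samples closer to the
    center, which enlarges the result and counteracts the shrinking.
    """
    r2 = x * x + y * y  # r^2; both radial terms use it directly
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale / zoom, y * scale / zoom
```

In a shader the same arithmetic would run once per fragment on the texture coordinate, followed by a texture lookup at the returned position.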

-

alexs

- One Eyed Hopeful

- Posts: 9

- Joined: Sat Sep 08, 2012 6:42 pm

Re: Understanding software-side distortion correction

The best way to do the distortion correction is to do a non-planar projection. That way you don't need a post-process. To give you an example, have a look at Fisheye Quake: http://strlen.com/gfxengine/fisheyequake/compare.html

In reality, though, planar projection is so much simpler and nicely optimal for our rendering hardware. So it's easier to render it using the traditional way and then post-process the image. This does mean that the pixels will not end up the same size. And yes, you are undoing the increased pixel density.

There are some methods of getting around this. For example, you could render at higher resolution (like MSAA does) and then you have the detail to work with. Or, you could do a multi-view render at different resolutions. Or there are a few other ways. In the end it comes down to a trade off between the speed of your rendering versus the extra detail.

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

Fisheye Quake is quite interesting, though it does not do any real non-planar projection at all. It renders six views with a 90° FOV, effectively creating a cubemap, and stitches those six views together in a post-process, in much the same way as cubemap-based panorama viewers (QuickTime VR etc.) do. Doing something like this would be a performance hog of epic proportions compared to rendering just one view and distorting it. It doesn't really make sense until you have much higher FOVs to cover than the current Rift does.
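For readers unfamiliar with the cubemap approach described here: the stitching pass picks, for each output direction, the face whose axis has the largest absolute component, then projects the direction onto that face. A minimal Python sketch; the face names and the (u, v) orientation convention are assumptions for illustration and do not follow any particular graphics API's layout.

```python
def cube_face(dx, dy, dz):
    """Return (face, u, v) for a view direction; u and v lie in [-1, 1].

    The face is the one whose axis dominates the direction vector.
    u and v are the other two components divided by the dominant one,
    i.e. a perspective projection onto that 90-degree face.
    """
    ax, ay, az = abs(dx), abs(dy), abs(dz)
    if ax >= ay and ax >= az:
        face, m, u, v = ("+x" if dx > 0 else "-x"), ax, dz, dy
    elif ay >= az:
        face, m, u, v = ("+y" if dy > 0 else "-y"), ay, dx, dz
    else:
        face, m, u, v = ("+z" if dz > 0 else "-z"), az, dx, dy
    if m == 0:
        raise ValueError("direction must be nonzero")
    return face, u / m, v / m
```

A fisheye output pass would compute one such direction per output pixel from its angle off-axis, which is why all six faces have to be rendered every frame.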

Rendering the initial render target at a slightly higher resolution before applying a correction shader seems the best way to achieve optimal quality to me. Judging from what one could see so far of the Rift's distortion strength, it won't even have to be that much higher, maybe a factor of 1.3 to 1.5.

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

I don't see the point of rendering at a higher resolution. From what I understand, the pixel proportion should be preserved in the center (1:1) and shrunk in the periphery, depending on the distance to the center. A higher resolution would be wasted, since the destination resolution would be equal to or lower than the source resolution. The key would be to implement a good subsampling algorithm for the periphery, I guess. Did I get something wrong?

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

Fredz wrote: From what I understand, the pixel proportion should be preserved in the center (1:1) and shrunk in the periphery, depending on the distance to the center.

That is my understanding too, but since the process shrinks the image as a whole, it makes sense to introduce some zooming or (very bad!) upscale the image afterwards.

I have to admit though that this might not be the most important thing in the world.

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

MaterialDefender wrote: That is my understanding too, but since the process shrinks the image as a whole, it makes sense to introduce some zooming or (very bad!) upscale the image afterwards.

What I don't understand is why this process would need to shrink the image as a whole. I see it as shown in this capture:

The image is rendered as usual with a rectilinear projection, but using a 90° or 110° FOV (bottom image). Then the warping transforms the bottom image to obtain the image on the top.

Downsampling from a higher-resolution rendering would be a little more effective than doing it from the original image, but I think that would mostly have an impact only in the corners. For the most important part of the image, we would have something close to a 1:1 mapping and almost no subsampling.

Also, I think there would be so much distortion from the optics in the corners that the enhanced sharpness would mostly go unnoticed. And I guess that's not a zone where the eyes would look naturally; they'd probably stay within a much smaller FOV. These zones would mostly serve as secondary cues, for which resolution isn't really important.

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

Hard to tell what exactly they do in this program just from screenshots. My current algorithm is modeled after the formula from the above mentioned Wikipedia article, which seems to be a common solution. And it does shrink the image. Not by much, but enough to make introducing some zoom/scaling a sensible decision.

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

Ah ok, I'll need to investigate that then, I didn't write my warping shader yet.

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

Here is a little picture illustrating the effect. The top image is the 'pure' distortion; the bottom image is distortion with zoom.

I took a few shortcuts for speed reasons, but I don't think any of them introduced the shrinking as an error. I'm 99% sure that this is inherent to the algorithm itself.

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

From the way it sounds, there isn't a 1:1 pixel representation toward the center; rather, the pixels are compressed. If you render the scene at the native resolution, you'll have to warp the center outward (losing detail where it's most noticeable) while warping the periphery inward. Rendering the scene at a higher resolution and then running it through a warping-mediated downscaling algorithm is likely the most efficient way to get a good balance of detail and performance. Non-planar projection would probably be ideal, but I'm guessing DX and OpenGL don't support it.

- cybereality

- 3D Angel Eyes (Moderator)

- Posts: 11407

- Joined: Sat Apr 12, 2008 8:18 pm

Re: Understanding software-side distortion correction

Here is how the warping looks in my driver.

Notice how the extreme corners have stretched pixels, but the vast majority of the image looks crisp, with nice detail. I have not tried this on the Rift, but I think it will look good enough.

-

alexs

- One Eyed Hopeful

- Posts: 9

- Joined: Sat Sep 08, 2012 6:42 pm

Re: Understanding software-side distortion correction

@MaterialDefender you're right about how Fisheye Quake implemented it. I was hoping to use the images more to illustrate the concept.

@Fredz I think people say it "shrinks" the image as a whole because of the way the Rift will use a single panel with the optics for each eye. So there will be dead zones where the optics can't see?

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

Ok, I think I get it now.

Consider this schema of the 1D case showing the horizontal line in the middle of the screen, i.e. the line from (0, 400) to (639, 400) in a 640x800 viewport:

If we use a rectilinear projection with a 90° horizontal FOV, the mapping to a curvilinear projection should do the following transformation:

- (0, 400) -> (0, 400);

- (187.5, 400) -> (160, 400);

- (320, 400) -> (320, 400);

- (452.5, 400) -> (480, 400);

- (639, 400) -> (639, 400).
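The numbers in this list can be reproduced by assuming the curvilinear target is an equidistant (f-theta) projection, where distance from the center is proportional to the view angle, while the rendered image is rectilinear, where distance is proportional to tan(angle). Both are scaled so the 45° half-FOV lands on the edge, 320 pixels from the center. The equidistant assumption is ours; only the mapped points come from the post.

```python
import math

HALF_WIDTH = 320.0             # pixels from the center to the edge
HALF_FOV = math.radians(45.0)  # half of a 90-degree horizontal FOV

def rect_from_curv(x_curv):
    """Rectilinear x that ends up at x_curv in the equidistant output."""
    theta = (x_curv - HALF_WIDTH) / HALF_WIDTH * HALF_FOV  # angle is linear in x
    d = HALF_WIDTH / math.tan(HALF_FOV)                    # rectilinear focal length
    return HALF_WIDTH + d * math.tan(theta)
```

For example, `rect_from_curv(160)` is about 187.45 and `rect_from_curv(480)` about 452.55, matching the 187.5 and 452.5 above after rounding.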

In this case, the horizontal line in the middle of the screen is preserved by the warping, but you lose resolution in the center. Stretching 132.5 pixels to 160 may not look good on a low-resolution display, so a higher-resolution rendering would be needed.

It could still be possible to use the native image and find a good pixel correspondence between rectilinear and curvilinear, averaging several pixels in the source image into a single one in the destination image. Some sort of look-up table should make this quite fast, I suppose, and the end result may look acceptable.
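A minimal 1D sketch of the look-up-table idea. Assumptions for illustration only: one 640-pixel row, a 90° rectilinear source mapped to an equidistant (f-theta) destination, and a simple box filter that averages every source pixel whose center falls inside each destination pixel's footprint. Near the center the footprint is narrower than one pixel, so the table falls back to the nearest source pixel there; that is the center resolution loss described above.

```python
import math

WIDTH = 640
HALF = WIDTH / 2.0
HALF_FOV = math.radians(45.0)
FOCAL = HALF / math.tan(HALF_FOV)  # rectilinear focal length in pixels

def src_x(dst_x):
    """Source (rectilinear) x for a destination (equidistant) x."""
    theta = (dst_x - HALF) / HALF * HALF_FOV
    return HALF + FOCAL * math.tan(theta)

def build_lut():
    """For each destination pixel, the source pixel indices to average."""
    lut = []
    for d in range(WIDTH):
        lo, hi = src_x(d), src_x(d + 1)  # footprint of destination pixel d
        idx = [s for s in range(WIDTH) if lo <= s + 0.5 < hi]
        if not idx:  # footprint smaller than one source pixel
            idx = [min(int((lo + hi) / 2), WIDTH - 1)]
        lut.append(idx)
    return lut

def resample_row(row, lut):
    """Apply the precomputed table: box-average the listed source pixels."""
    return [sum(row[i] for i in idx) / len(idx) for idx in lut]
```

The table is built once; each frame then only does the cheap averaging in `resample_row`. A 2D version would store a small list of source pixels per destination pixel in the same way.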

Now I need to calculate what the dimensions of the higher resolution image should be and implement that.

You nailed it, MSat. I should have waited for answers instead of trying to draw a schema to understand the problem...

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

Fredz wrote: I should have waited for answers instead of trying to draw a schema to understand the problem...

On the contrary. I think your drawing shows exactly what happens (at least with the Brown distortion model from Wikipedia; other methods might do something else, of course). Thanks for the effort. You get 1:1 pixel mapping in the center, and even better than 1:1 the farther out you go. As a result, you get a somewhat smaller output image that has to be compensated for by upscaling/zooming, which creates the need for the higher-res input to preserve the 1:1 ratio in the center.

My guess for a reasonable higher res input is something around factor 1.3, depending on the distortion strength. 1.5 if you want to be on the really safe side. The image I posted uses a 1.16 zoom/upscale, 1.3 would be more than enough in this case.
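A small sketch for turning a distortion strength into a zoom and a render target size. Assumptions (not MaterialDefender's stated method): the correction samples the source at r (1 + k1 r² + k2 r⁴), coordinates are normalized so the output edge is at r = 1, and the zoom is chosen so that point maps exactly back onto the source edge. The same zoom magnifies the center, so rendering the source larger by that factor keeps roughly 1:1 there. Any `k1`, `k2` values you plug in are hypothetical until the real lens is known.

```python
import math

def edge_zoom(k1, k2=0.0, r_edge=1.0):
    """Zoom that maps the output point at r_edge back onto the source edge,
    for a sampling warp with scale = 1 + k1*r^2 + k2*r^4."""
    r2 = r_edge * r_edge
    return 1.0 + k1 * r2 + k2 * r2 * r2

def render_target(width, height, factor):
    """Per-eye render target size for a given upscale/supersampling factor."""
    return math.ceil(width * factor), math.ceil(height * factor)
```

For example, a factor of 1.3 on a 640x800 half-screen gives `render_target(640, 800, 1.3) == (832, 1040)`.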

- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France

- Contact:

Re: Understanding software-side distortion correction

OK, I've tried to calculate what the dimensions of the rendering viewport should be so as not to lose any resolution in the end. Not sure if I got that right, feel free to correct me if you think there is an error.

For each half screen we've got a 90x110° FOV and a 640x800 resolution. The center pixel viewed through the optics subtends an angle of 90°/640 = 0.140625°. With the formula X = D x tan(angle) from the previous schema, this gives a value of ~0.7853 for X and ~0.956 for Y (using a 110° FOV for 800 pixels).

So the 1x1 pixel closest to the center, viewed through the optics, corresponds to 0.7853x0.956 pixels on the display. To avoid losing any resolution, we need a viewport of (640/0.7853)x(800/0.956) = 815x833 pixels. That's x1.2732 in the horizontal resolution (very close to your estimated factor of 1.3) and x1.0417 in the vertical one.

So viewing a warped image without losing detail on a 1280x800 display with a FOV of 90x110° requires a virtual display with a 1630x833 resolution. I guess it'll still be necessary to interpolate several pixels in the source to render one pixel in the destination to avoid aliasing effects, though.
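A quick check of the horizontal figures, under the same assumptions as the schema: D = 320 pixels (the rectilinear distance to the edge of a 90° half-screen) and one output pixel subtending 90°/640 at the center. Only the horizontal calculation is reproduced here.

```python
import math

def center_pixel_footprint(half_px, half_fov_deg, pixels):
    """Width on the rectilinear render of one output pixel at the center.

    Each output pixel subtends (2 * half_fov) / pixels degrees; the
    rectilinear image places it at D * tan(angle), D = half_px / tan(half_fov).
    """
    d = half_px / math.tan(math.radians(half_fov_deg))
    angle = math.radians(2 * half_fov_deg / pixels)
    return d * math.tan(angle)

fx = center_pixel_footprint(320, 45, 640)  # about 0.7854 (posted as ~0.7853)
factor = 1 / fx                            # about 1.2732
width = round(640 * factor)                # 815 per eye, 1630 for both
```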

Last edited by Fredz on Tue Sep 11, 2012 6:07 pm, edited 1 time in total.

- MrGreen

- Diamond Eyed Freakazoid!

- Posts: 741

- Joined: Mon Sep 03, 2012 1:36 pm

- Location: QC, Canada

-

MSat

- Golden Eyed Wiseman! (or woman!)

- Posts: 1329

- Joined: Fri Jun 08, 2012 8:18 pm

Re: Understanding software-side distortion correction

Fredz wrote: OK, I've tried to calculate what the dimensions of the rendering viewport should be so as not to lose any resolution in the end. Not sure if I got that right, feel free to correct me if you think there is an error.

For each half screen we've got a 90x110° FOV and a 640x800 resolution. The center pixel viewed through the optics subtends an angle of 90°/640 = 0.140625°. With the formula X = D x tan(angle) from the previous schema, this gives a value of ~0.7853 for X and ~0.956 for Y (using a 110° FOV for 800 pixels).

So the 1x1 pixel closest to the center, viewed through the optics, corresponds to 0.7853x0.956 pixels on the display. To avoid losing any resolution, we need a viewport of (640/0.7853)x(800/0.956) = 815x833 pixels. That's x1.2732 in the horizontal resolution (very close to your estimated factor of 1.3) and x1.0417 in the vertical one.

So viewing a warped image without losing detail on a 1280x800 display with a FOV of 90x110° requires a virtual display with a 1630x833 resolution. I guess it'll still be necessary to interpolate several pixels in the source to render one pixel in the destination to avoid aliasing effects, though.

Maybe I'm missing something, but it looks like your calculations assume evenly spaced pixels across the whole FOV. The pixels towards the center are likely to be smaller, so you would have to render at a higher resolution.

-

MaterialDefender

- Binocular Vision CONFIRMED!

- Posts: 262

- Joined: Wed Aug 29, 2012 12:36 pm

Re: Understanding software-side distortion correction

MSat has a point, but since the center is the worst case, you can ignore that, as you did. The worst case is the one that matters for the magnification.
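To see why the center is the worst case, here is a hedged sketch (not from the thread) that computes the angle each pixel of a flat, rectilinear render target subtends over a 90° FOV, assuming the render plane is placed so that its half-width spans half the FOV. A central pixel covers roughly twice the angle of an edge pixel, so matching the resolution at the center covers the rest of the image. The ratio of the even-spacing angle (90°/640) to the central pixel's angle also comes out at about 0.7854, the same X value as in the calculation above. The function name is illustrative.

```python
import math

def pixel_angles_deg(pixels: int, fov_deg: float) -> list[float]:
    """Angle (degrees) subtended at the eye by each pixel of a flat render
    target whose half-width spans half of the FOV."""
    # Eye-to-plane distance, in pixel units.
    d = (pixels / 2) / math.tan(math.radians(fov_deg) / 2)
    angles = []
    for i in range(pixels):
        left = i - pixels / 2          # pixel i spans [left, left + 1] on the plane
        right = left + 1
        angles.append(math.degrees(math.atan(right / d) - math.atan(left / d)))
    return angles

angles = pixel_angles_deg(640, 90.0)
center, edge = angles[320], angles[0]
print(f"center {center:.4f} deg, edge {edge:.4f} deg")
print(f"even spacing / center = {(90 / 640) / center:.4f}")
```

With these inputs the central pixel covers about 0.179° and the edge pixel about 0.090°, while the angles still add up to the full 90°.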

But: while your drawing shows the (simplified) principle, the radius of your 'lens-circle' is definitely far off. That is a critical factor: the required scaling depends on the distortion strength. You have no chance of calculating this without knowing the characteristics of the lens. And real lens distortion is more complex. I might sound like a broken record, but read the Wikipedia article, and then some papers on the Brown model, if you like. No need to reinvent the wheel; the current one is perfectly round.
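For reference, here is a minimal sketch of the Brown-Conrady distortion model, written in the form used by OpenCV's camera calibration: radial terms k1, k2, k3 and tangential terms p1, p2, applied to coordinates normalized around the optical center. The coefficient values in the example are made up for illustration. Real ones depend on the lens, which is exactly the point being made here.

```python
from dataclasses import dataclass

@dataclass
class BrownConrady:
    """Radial (k1, k2, k3) and tangential (p1, p2) distortion coefficients.

    Real values are lens-specific (measured or taken from the lens design);
    any values used below are placeholders.
    """
    k1: float = 0.0
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0
    p2: float = 0.0

    def distort(self, x: float, y: float) -> tuple[float, float]:
        """Map an undistorted point (x, y), normalized around the optical
        center, to its distorted position."""
        r2 = x * x + y * y
        radial = 1 + self.k1 * r2 + self.k2 * r2**2 + self.k3 * r2**3
        xd = x * radial + 2 * self.p1 * x * y + self.p2 * (r2 + 2 * x * x)
        yd = y * radial + self.p1 * (r2 + 2 * y * y) + 2 * self.p2 * x * y
        return xd, yd

lens = BrownConrady(k1=0.22, k2=0.24)   # hypothetical coefficients
print(lens.distort(0.0, 0.0))           # (0.0, 0.0): the center is unaffected
print(lens.distort(0.5, 0.5))           # off-center points move outward for k1, k2 > 0
```

Setting p1 = p2 = 0 drops the tangential terms, and keeping only k1 gives the first-order model.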


In your actual implementation you will get away with a simplified version, though. First- and second-order distortion will be enough, most likely even first order alone (which will save you a relatively computationally expensive square root calculation in your shader), and it's highly likely that you can omit tangential distortion completely. Then experiment, and let your eye be the judge. Due to the inherently somewhat blurry texture sampling in your shader, it will not matter whether you get the scaling factor 100% correct anyway. And slight oversampling never hurts, if you can afford it performance-wise.
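With only the first radial term left, the per-pixel work is one multiply-add on x*x + y*y. The sketch below (function names and the k1 value are hypothetical) shows that lookup as a fragment shader might evaluate it for one output pixel. It also computes the factor by which the render target must grow, compared with no warp, so that the lookup for a pixel at a given radius still lands inside the rendered image. As the post says, this factor only needs to be roughly right.

```python
def sample_coord(x: float, y: float, k1: float) -> tuple[float, float]:
    """First-order radial warp: for the output pixel at (x, y), normalized
    around the lens center, return the position to read from the
    undistorted render. Uses x*x + y*y directly."""
    scale = 1.0 + k1 * (x * x + y * y)
    return x * scale, y * scale

def render_scale(k1: float, edge_radius: float) -> float:
    """Factor by which the render target must grow (compared with no warp)
    so that the lookup for an output pixel at `edge_radius` stays inside it."""
    return 1.0 + k1 * edge_radius ** 2

K1 = 0.22                            # hypothetical coefficient; tune by eye
print(sample_coord(0.0, 0.0, K1))    # the center samples itself
print(render_scale(K1, 1.0))         # enlargement needed for an edge at r = 1
```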


- Fredz

- Petrif-Eyed

- Posts: 2255

- Joined: Sat Jan 09, 2010 2:06 pm

- Location: Perpignan, France


Re: Understanding software-side distortion correction

MaterialDefender wrote: MSat has a point, but since the center is the worst case, you can omit that, like you did. The worst case is the important one for the magnification.

Yes, that's how I interpreted it as well; I just wanted to calculate the minimal viewport size so as not to lose resolution after warping. Considering only the pixel closest to the center seemed to be enough for this.

MaterialDefender wrote: But: while your drawing shows the (simplified) principle, the radius of your 'lens-circle' is definitely far off. [...] I might sound like a broken record, but read the Wikipedia article, and then some papers on the Brown model, if you like. No need to reinvent the wheel, the current one is perfectly round.

The radius drawing was just to illustrate the principle; I won't base my calculations on this schema. I'll read the Wikipedia entry and the articles by Paul Bourke, but I needed to understand the limitations of more straightforward techniques before doing so.

MaterialDefender wrote: In your actual implementation you will get away with a simplified version though. First and second order distortion will be enough, most likely even first order alone (which will save you some relatively computationally intense square root calculation in your shader), also it's highly likely that you can omit tangential distortion completely.

I think I'll start by implementing a correct solution outside of a shader, in pure C using known algorithms. Then I'll compute look-up tables from it to simplify the shader code and get good performance. I suppose that's possible; any opinions on this?
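Here is a minimal sketch of the look-up-table idea, in Python rather than C or a shader for brevity. The warp is evaluated once per output pixel to fill a table of source coordinates. Each frame then needs only a table read plus a bilinear fetch, which is the "interpolate several source pixels" step. On a GPU the table would typically live in a texture, and the bilinear fetch would be done by the texture hardware. The function names, the 4x4 test image and the first-order warp with k1 = 0.22 are all hypothetical.

```python
from typing import Callable

Warp = Callable[[float, float], tuple[float, float]]
Image = list[list[float]]

def build_lut(out_w: int, out_h: int, src_w: int, src_h: int, warp: Warp):
    """Precompute, once, the source pixel coordinate for every output pixel.
    `warp` maps normalized output coords in [-1, 1] to normalized source
    coords; any model (polynomial, ray traced, ...) can be plugged in."""
    lut = []
    for j in range(out_h):
        row = []
        for i in range(out_w):
            x = (i + 0.5) / out_w * 2 - 1      # output pixel center -> [-1, 1]
            y = (j + 0.5) / out_h * 2 - 1
            sx, sy = warp(x, y)
            # [-1, 1] -> source pixel coordinates (pixel centers at integers)
            row.append(((sx + 1) / 2 * src_w - 0.5, (sy + 1) / 2 * src_h - 0.5))
        lut.append(row)
    return lut

def bilinear(img: Image, x: float, y: float) -> float:
    """Bilinearly sample a grayscale image at fractional pixel coordinates.
    Lookups outside the image footprint return 0.0 (black)."""
    h, w = len(img), len(img[0])
    if not (-0.5 <= x <= w - 0.5 and -0.5 <= y <= h - 0.5):
        return 0.0
    x = min(max(x, 0.0), w - 1.0)              # clamp to edge inside the image
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def remap(img: Image, lut) -> Image:
    """Per-frame work: one table read and one bilinear fetch per output pixel."""
    return [[bilinear(img, sx, sy) for sx, sy in row] for row in lut]

def first_order(x: float, y: float, k1: float = 0.22) -> tuple[float, float]:
    """Hypothetical first-order radial warp used to fill the table."""
    s = 1 + k1 * (x * x + y * y)
    return x * s, y * s

src = [[float(i + 4 * j) for i in range(4)] for j in range(4)]
lut = build_lut(4, 4, 4, 4, first_order)
print(remap(src, lut))
```

Only build_lut depends on the distortion model, so the exact (slow) computation can be swapped in without touching the per-frame path.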

If the results are not convincing, I'll try ray tracing, using the surface equations for aspheric lenses (Wikipedia is my friend there too) and taking their refractive index into account (maybe separately for the R, G and B components, to account for chromatic aberration). That could then be extended to multiple lenses, as in LEEP arrangements.
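For the ray-tracing route, the two building blocks are the sag equation of an even asphere and Snell's law in vector form. The sketch below (not from the thread) computes the surface normal by finite differences and refracts one off-axis ray using a different refractive index per color channel, which is where chromatic aberration comes from. The lens radius, conic constant, A4 coefficient and the three indices are made-up illustrative values, not data for any real headset lens.

```python
import math
from typing import Optional

Vec = tuple[float, float, float]

def sag(r: float, radius: float, conic: float, coeffs: tuple[float, ...] = ()) -> float:
    """Even-asphere sag z(r): conic term plus A4*r^4 + A6*r^6 + ...
    `radius` is the vertex radius of curvature, `coeffs` = (A4, A6, ...)."""
    c = 1.0 / radius
    z = c * r * r / (1.0 + math.sqrt(1.0 - (1.0 + conic) * c * c * r * r))
    for i, a in enumerate(coeffs):
        z += a * r ** (4 + 2 * i)
    return z

def surface_normal(x: float, y: float, radius: float, conic: float,
                   coeffs: tuple[float, ...] = (), h: float = 1e-6) -> Vec:
    """Unit normal of z = sag(sqrt(x^2 + y^2)) pointing towards +z,
    using central finite differences for the slopes."""
    def z(px: float, py: float) -> float:
        return sag(math.hypot(px, py), radius, conic, coeffs)
    dzdx = (z(x + h, y) - z(x - h, y)) / (2 * h)
    dzdy = (z(x, y + h) - z(x, y - h)) / (2 * h)
    length = math.sqrt(dzdx * dzdx + dzdy * dzdy + 1.0)
    return (-dzdx / length, -dzdy / length, 1.0 / length)

def refract(d: Vec, n: Vec, eta: float) -> Optional[Vec]:
    """Vector form of Snell's law (same formula as GLSL's refract()).
    d: unit incident direction; n: unit normal facing against d;
    eta: n1 / n2. Returns None on total internal reflection."""
    cos_i = -(d[0] * n[0] + d[1] * n[1] + d[2] * n[2])
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    t = eta * cos_i - math.sqrt(k)
    return (eta * d[0] + t * n[0], eta * d[1] + t * n[1], eta * d[2] + t * n[2])

normal = surface_normal(5.0, 0.0, 30.0, -0.5, (1e-6,))   # hypothetical lens
ray = (0.0, 0.0, -1.0)     # travelling towards -z, hitting the surface off axis
for channel, n_glass in (("R", 1.489), ("G", 1.492), ("B", 1.498)):  # made-up indices
    print(channel, refract(ray, normal, 1.0 / n_glass))              # air -> glass
```

Each channel leaves the surface in a slightly different direction. Tracing the three rays through every surface of a multi-lens arrangement is what a per-channel correction would be built from.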